Hybrid Massively Parallel Program (HMPP)

The Hybrid MPP (HMPP) version is a parallel version introduced in HyperWorks 11.0. It combines the strengths of the two previous parallel versions, SMP (shared memory, multithreaded) and SPMD (distributed memory, MPI), in a single code.

Benefits

This approach provides a high level of scalability: Radioss HMPP can scale to 512 cores and beyond.

Radioss HMPP is independent of the computer architecture and adapts efficiently to the available hardware. It can run on distributed memory machines, shared memory machines, workstation clusters, or high performance computing clusters. It makes better use of highly multi-core machines, because the split between SPMD domains and SMP threads can be tuned to the hardware.

It reduces installation and maintenance costs, since a single parallel executable replaces two types of executables.

It improves the consistency of numerical results, since SMP and SPMD results fully agree. With /PARITH/ON, the results are identical regardless of the number of SPMD domains and the number of SMP threads used.

Hybrid Usage Example

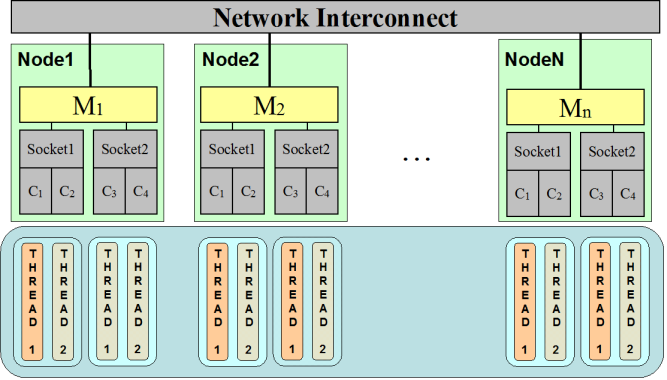

Figure 1. Hybrid Version Run with 2 SMP Threads per SPMD Domain

With the Hybrid version, it is easy to optimize the software for such a complex architecture. Figure 1 shows that each SPMD domain is computed by a different processor, and the number of threads per domain is set to the number of cores per processor (two).

So, each processor computes an SPMD domain using two SMP threads, one per core.
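The placement described for Figure 1 (one SPMD domain per processor, one SMP thread per core) can be written out as a simple mapping. This is a minimal Python sketch, not Radioss code. The names `Placement` and `figure1_layout` are hypothetical, and the machine size (4 processors) is an assumption, because the text only fixes two cores per processor.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Placement:
    domain: int     # SPMD domain (one MPI process)
    thread: int     # SMP thread within that domain
    processor: int  # processor running the domain
    core: int       # core running the thread

def figure1_layout(n_processors: int, cores_per_processor: int) -> list[Placement]:
    """One SPMD domain per processor, one SMP thread per core of that processor."""
    return [
        Placement(domain=d, thread=t, processor=d, core=t)
        for d in range(n_processors)
        for t in range(cores_per_processor)
    ]

# Hypothetical machine: 4 processors with 2 cores each.
layout = figure1_layout(n_processors=4, cores_per_processor=2)
for p in layout:
    print(f"domain {p.domain}, thread {p.thread} -> processor {p.processor}, core {p.core}")
```

Each (processor, core) pair appears exactly once, so no two threads compete for the same core.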

Making it Work

As in the SPMD version, Radioss Starter divides the model into several SPMD domains and writes multiple RESTART files.

Then, the mpirun command is used to start all the SPMD programs. Each program computes one SPMD domain using the specified number of SMP threads; in other words, each MPI program is itself an SMP parallel program. Computation at the domain boundaries still has to be managed, so some information must be exchanged between the programs using MPI.

Execution Example

Radioss Starter is run from the command line. Here, the number of SPMD domains (Nspmd) is set to 16 with the -nspmd option:

./s_11.0_linux64 -nspmd 16 -i ROOTNAME_0000.rad

The number of SMP threads (Nthread) is set with the environment variable OMP_NUM_THREADS (csh syntax shown; in bash, use export OMP_NUM_THREADS=2):

setenv OMP_NUM_THREADS 2

Then, Radioss Engine is run using the mpirun command:

mpirun -np 16 ./e_11.0_linux64_impi -i ROOTNAME_0001.rad

The -np value of mpirun must match the -nspmd value. The total number of threads, and thus of cores used, is Nspmd * Nthread (16 * 2 = 32 in this example).
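The three steps of the execution example can be collected in one helper that also enforces the rule that -np matches -nspmd. This is a minimal Python sketch that only builds the command strings shown above and does not launch Radioss. The function names `hmpp_commands`, `option_value` and `total_cores` are hypothetical, and the executable names default to the ones used in this example.

```python
def hmpp_commands(rootname: str, nspmd: int, nthread: int,
                  starter: str = "./s_11.0_linux64",
                  engine: str = "./e_11.0_linux64_impi") -> list[str]:
    """Build the Starter, environment (csh syntax) and Engine steps of an HMPP run."""
    if nspmd < 1 or nthread < 1:
        raise ValueError("nspmd and nthread must be positive")
    return [
        f"{starter} -nspmd {nspmd} -i {rootname}_0000.rad",
        f"setenv OMP_NUM_THREADS {nthread}",
        # The same nspmd value is reused for -np, so the two always match.
        f"mpirun -np {nspmd} {engine} -i {rootname}_0001.rad",
    ]

def option_value(cmd: str, flag: str) -> int:
    """Return the integer that follows `flag` in a command string."""
    tokens = cmd.split()
    return int(tokens[tokens.index(flag) + 1])

def total_cores(nspmd: int, nthread: int) -> int:
    """Cores used when each SMP thread runs on its own core."""
    return nspmd * nthread

cmds = hmpp_commands("ROOTNAME", nspmd=16, nthread=2)
print("\n".join(cmds))
assert option_value(cmds[0], "-nspmd") == option_value(cmds[2], "-np")
print(total_cores(16, 2))  # 32
```

Deriving both flags from one `nspmd` argument avoids the mismatch that the rule above warns about.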

Recommended Setup

As seen in the example above, a good rule is to set the number of SPMD domains equal to the number of sockets and to set the number of SMP threads equal to the number of cores per socket.
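The socket-based rule can be expressed as a small function. This is a minimal Python sketch; `recommended_setup` is a hypothetical name. The cluster used below (8 nodes, each with two dual-core sockets) is an assumption chosen because it reproduces the 16 x 2 configuration of the execution example.

```python
def recommended_setup(n_nodes: int, sockets_per_node: int,
                      cores_per_socket: int) -> tuple[int, int]:
    """Rule of thumb: one SPMD domain per socket, one SMP thread per core of that socket."""
    nspmd = n_nodes * sockets_per_node  # value for -nspmd and mpirun -np
    nthread = cores_per_socket          # value for OMP_NUM_THREADS
    return nspmd, nthread

# Hypothetical cluster: 8 nodes x 2 sockets x 2 cores per socket.
nspmd, nthread = recommended_setup(n_nodes=8, sockets_per_node=2, cores_per_socket=2)
print(nspmd, nthread, nspmd * nthread)  # 16 2 32
```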

With a low number of cores (fewer than 32), a pure SPMD run may be more efficient, but the performance gap should be small if the setup described above is followed.

With a very high number of cores (for example, 1024), the number of SMP threads can be increased up to the number of cores per node, with only one SPMD domain per node. This maximizes performance when the scalability limit of the interconnect network is reached, because fewer MPI processes exchange data over the network.
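The layouts discussed in this section can be compared side by side for a given cluster. This is a minimal Python sketch that only enumerates candidate (Nspmd, Nthread) pairs and does not predict which one is fastest, since that depends on the hardware and should be measured. The names `Layout` and `candidate_layouts` are hypothetical, and the 1024-core cluster (32 nodes, 2 sockets per node, 16 cores per socket) is an assumption.

```python
from typing import NamedTuple

class Layout(NamedTuple):
    name: str
    nspmd: int    # value for -nspmd (Starter) and -np (mpirun)
    nthread: int  # value for OMP_NUM_THREADS

def candidate_layouts(n_nodes: int, sockets_per_node: int,
                      cores_per_socket: int) -> list[Layout]:
    """Pure SPMD, one domain per socket, and one domain per node."""
    cores_per_node = sockets_per_node * cores_per_socket
    total = n_nodes * cores_per_node
    return [
        Layout("pure SPMD", total, 1),  # may suit fewer than 32 cores
        Layout("one domain per socket", n_nodes * sockets_per_node, cores_per_socket),
        Layout("one domain per node", n_nodes, cores_per_node),  # fewest MPI processes
    ]

# Hypothetical 1024-core cluster: 32 nodes x 2 sockets x 16 cores.
for layout in candidate_layouts(n_nodes=32, sockets_per_node=2, cores_per_socket=16):
    print(f"{layout.name:22s} Nspmd={layout.nspmd:4d} Nthread={layout.nthread:2d} "
          f"cores={layout.nspmd * layout.nthread}")
```

All three layouts use the same 1024 cores. They differ in how many MPI processes communicate over the interconnect (1024, 64 or 32 here).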