AcuRun

Script to run AcuPrep and AcuSolve in scalar or parallel environments.

Syntax

acuRun [options]

Type

AcuSolve Solver Program Command

Description

AcuRun is a script that facilitates running a problem in the scalar and all supported parallel environments. This script is a one-step process that executes AcuPrep to preprocess the user input file, AcuView to calculate radiation view factors if needed, and AcuSolve to solve the problem.

For a general description of option specifications, see Command Line Options and Configuration Files.

Parameters

- help or h (boolean)

- If set, the program prints a usage message and exits. The usage message includes all available options, their current values, and the place where each option is set.

- problem or pb (string)

- The name of the problem is specified via this parameter. This name is used to generate and recognize internal files.

- problem_directory or pdir (string)

- The home directory of the problem; this is where the user input files reside. This option is needed by certain parallel processing packages. If problem_directory is set to ".", namely the Unix current directory, it is replaced by the full address of the current directory, as provided by the Unix getcwd command.

- working_directory or dir (string)

- All internal files are stored in this directory. This directory does not need to be on the same file system as the input files of the problem.

- file_format or fmt (enumerated) [binary]

- Format of the internal files:

- ascii

- ASCII files

- binary

- Binary files

- hdf

- Hierarchical data format (HDF5)

- process_modules or do (enumerated) [all]

- Specifies which modules to execute

- prep

- Execute only AcuPrep

- view

- Execute only AcuView

- solve

- Execute only AcuSolve. This requires that AcuPrep and AcuView (if necessary) have previously been executed.

- prep-solve

- Execute only AcuPrep and AcuSolve. This requires that AcuView has previously been executed.

- all

- Execute AcuPrep, AcuView, and AcuSolve

- install_smpd or ismpd (boolean) [FALSE]

- If set, this parameter installs the appropriate message passing daemon and then exits. Valid only for mp=impi, mp=mpi and mp=pmpi when running on Windows platforms. Note that a message passing daemon is only necessary for mp=impi and mp=pmpi when running across multiple hosts. For mp=mpi, it is always necessary to have the message passing daemon service running before executing the simulation. See the Altair Simulation Installation Guide for more details.

- test_mpi or tmpi (boolean) [FALSE]

- If set, run a test of MPI only by executing the Pallas MPI benchmark.

- log_output or log (boolean) [TRUE]

- If set, the printed messages of AcuRun and the modules executed by it are redirected to the .log file problem.run.Log, where run is the ID of the current run. Otherwise, the messages are sent to the screen and no logging is performed.

- append_log or append (boolean) [FALSE]

- If log_output is set, this parameter specifies whether to create a new .log file or append to an existing one.

- tail_log or tlog (boolean) [FALSE]

- If tail_log is activated, the AcuTail utility is launched and attached to the .log file of the current run once the simulation begins. This option is intended for interactive runs where you want to monitor the .log file as it is written to disk.

- launch_probe or lprobe (boolean) [FALSE]

- If launch_probe is activated, the AcuProbe utility is launched and attached to the .log file of the current run once the simulation begins. This option is intended for interactive runs where you want to plot residuals and integrated solution quantities as the simulation progresses.

- translate_output_to or tot (enumerated) [none]

- Automatically executes AcuTrans at the conclusion of the run to convert nodal output into the desired visualization format.

- none

- No translation is performed

- table

- Output is translated to table format

- cgns

- Output is translated to CGNS format

- ensight

- Output is translated to EnSight format

- fieldview

- Output is translated to FieldView format

- h3d

- Output is translated to H3D format

- acuoptistruct_type or aos (enumerated) [none]

- After a run is completed, create an OptiStruct input deck with type: none,ctnl,tnl,tl,snl,sl.

- input_file or inp (string)

- The input file name is specified via this option. If input_file is set to _auto, problem.inp is used.

- restart or rst (boolean) [FALSE]

- Create and run a simple restart file.

- fast_restart or frst (boolean) [FALSE]

- Perform a fast restart. Using this type of restart, the AcuPrep stage is skipped and the solver resumes from the last restart file that was written for the run that is being restarted. Note that the number of processors must be held constant across the restart when the fast_restart parameter is used.

- fast_restart_run_id or frstrun (integer) [0]

- The run ID to use as the basis for the fast_restart. When this parameter is set to 0, the last available run ID is used.

- fast_restart_time_step_id or frstts (integer) [0]

- The time step to use as the basis for the fast_restart. When this option is set to 0, the last available time step is used.

- echo_input or echo (boolean) [TRUE]

- Echo the input file to the file problem.run.echo; where run is the ID of the current run.

- echo_precedence or prec (boolean) [FALSE]

- Echo the resolved boundary condition precedence, if any, to the file problem.bc_warning.

- echo_help or eh (boolean) [FALSE]

- Echo the command help, that is, the results of acuRun -h, to the .log file.

- run_as_tets or tet (boolean) [FALSE]

- Run the problem as all tet mesh. All continuum fluid and solid elements are split into tetrahedron elements, and all thermal shell elements are converted to wedge elements. The surface facets are split into triangles. This option typically reduces the memory and CPU usage of AcuSolve.

- auto_wall_surface_output or awosf (boolean) [TRUE]

- Create surface output for auto_wall surfaces.

- user_libraries or libs (string)

- Comma-separated list of user libraries. These libraries contain the user-defined functions and are generated by acuMakeLib or acuMakeDll.

- viewfactor_file_name or vf (string)

- Name of the view factor file. If viewfactor_file_name is set to _auto, an internal name is used. This option is useful when you want to save the computed view factors for another simulation.

- message_passing_type or mp (enumerated) [impi]

- Type of message passing environment used for parallel processing:

- none

- Run in single-processor mode

- impi

- Run in parallel on all platforms using Intel MPI

- pmpi

- Run in parallel on all platforms using Platform MPI

- mpi

- Run in parallel on all platforms using MPICH

- msmpi

- Run in parallel on Windows using Microsoft MPI

- hpmpi

- Run in parallel on all platforms using Platform MPI. Note that HP-MPI has been replaced by Platform MPI and this option is only included for backward compatibility. Identical to the setting mp=pmpi.

- openmp

- Run in parallel on all platforms using OpenMP

Note: Select impi, msmpi or openmp for message_passing_type (mp) when you are running a simulation with UDF on 64-bit Windows.

- num_processors or np (integer) [1]

- This parameter specifies the total number of threads to be used. The number of physical processors used is num_processors divided by num_threads. If message_passing_type is set to none, num_processors is reset to 1, and vice versa.

- num_threads or nt (string) [_auto]

- This parameter specifies the number of threads per processor to be used. If num_threads>1, this option converts an MPI run to a hybrid MPI/OpenMP run. If this option is set to _auto, AcuRun automatically detects the number of cores on the machine and sets the thread count accordingly. This forces AcuSolve to use shared memory parallel message passing whenever possible.

- num_actual_solver_threads or nast (integer) [0]

- Number of threads the solver actually uses for each process. Deactivated by default. Activate to overwrite nThreads but use the number of processes and mesh partitioning style set by nProcs and nThreads.

- num_subdomains or nsd (integer) [1]

- This parameter specifies the number of subdomains into which the mesh is to be decomposed. If num_subdomains is less than num_processors, it is reset to num_processors. Hence, setting num_subdomains to 1 allows you to control both the number of subdomains and processors through the num_processors parameter.

- host_lists or hosts (string) [_auto]

- List of machines (hosts) separated by commas. This list may exceed num_processors; the extra hosts are ignored. This list may also contain fewer entries than num_processors. In this case, the hosts are populated in the order that they are listed. The process is then repeated, starting from the beginning of the list, until each process has been assigned to a host. Multiple processes may be assigned to a single host in one entry by using the following syntax: host:num_processes, where host is the host name, and num_processes is the number of processes to place on that machine. When this option is set to _auto, the local host name is used.

The following examples illustrate the different methods of assigning the execution hosts for a parallel run:

Example 1:

acuRun -np 6 -hosts node1,node2

Result: The node list is repeated to use the specified number of processors. The processes are assigned: node1, node2, node1, node2, node1, node2

Example 2:

acuRun -np 6 -hosts node1,node1,node2,node2,node1,node2

Result: The processes are assigned: node1, node1, node2, node2, node1, node2

Example 3:

acuRun -np 6 -hosts node1:2,node2:4

Result: The processes are assigned: node1, node1, node2, node2, node2, node2
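The assignment rule described above can be sketched in a few lines of Python. This is only an illustration of the documented behavior, not AcuRun's actual implementation; the function name assign_hosts is hypothetical, and the sketch assumes that host:num_processes entries are expanded before the list is repeated.

```python
def assign_hosts(hosts: str, num_processors: int) -> list[str]:
    """Expand a comma-separated host list into one host name per process.

    Illustrative sketch only; assign_hosts is a hypothetical helper.
    """
    expanded = []
    for entry in hosts.split(","):
        entry = entry.strip()
        if not entry:
            continue
        # "host:num_processes" places several processes on one host.
        name, sep, count = entry.partition(":")
        expanded.extend([name] * (int(count) if sep else 1))
    if not expanded:
        raise ValueError("host list is empty")
    # A short list is repeated from the beginning; surplus entries are ignored.
    return [expanded[i % len(expanded)] for i in range(num_processors)]


print(assign_hosts("node1,node2", 6))
print(assign_hosts("node1:2,node2:4", 6))
```

The two calls correspond to Example 1 and Example 3 above and produce the same process-to-host orderings listed there.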

- view_message_passing_type or vmp (enumerated) [none]

- Type of message passing environment used for parallel processing of view factors:

- none

- Run in single-processor mode

- impi

- Run in parallel on all platforms using Intel MPI

- pmpi

- Run in parallel on all platforms using Platform MPI

- mpi

- Run in parallel on all platforms using MPICH

- msmpi

- Run in parallel on Windows using Microsoft MPI

- hpmpi

- Run in parallel on all platforms using Platform MPI. Note that HP-MPI has been replaced by Platform MPI and this option is only included for backward compatibility. Identical to the setting mp=pmpi.

- num_view_processors or vnp (integer) [0]

- This parameter specifies the number of processors to be used for view factor computation. If num_view_processors is 0, num_processors is assumed. If view_message_passing_type is set to none, num_view_processors is reset to 1, and vice versa.

- view_host_lists or vhosts (string) [_auto]

- List of machines (hosts), separated by commas, to run the view factor computation. This list may exceed num_processors; the extra hosts are ignored. This list may also contain fewer entries than num_processors. In this case, the hosts are populated in the order that they are listed. The process is then repeated, starting from the beginning of the list, until each process has been assigned to a host. Multiple processes may be assigned to a single host in one entry by using the following syntax: host:num_processes, where host is the host name, and num_processes is the number of processes to place on that machine. When this option is set to _auto, the host_lists parameter is used.

The following examples illustrate the different methods of assigning the execution hosts for a parallel run:

Example 1:

acuRun -np 6 -vhosts node1,node2

Result: The node list is repeated to use the specified number of processors. The processes are assigned: node1, node2, node1, node2, node1, node2

Example 2:

acuRun -np 6 -vhosts node1,node1,node2,node2,node1,node2

Result: The processes are assigned: node1, node1, node2, node2, node1, node2

Example 3:

acuRun -np 6 -vhosts node1:2,node2:4

Result: The processes are assigned: node1, node1, node2, node2, node2, node2

- pbs (boolean) [FALSE]

- Set parallel data, such as np and hosts, from PBS variable PBS_NODEFILE. Used when running AcuSolve through a PBS queue.

- pbs_attach (boolean) [FALSE]

- When toggled on, this parameter enables tighter integration with PBS schedulers. The AcuSolve processes are launched using the pbs_attach wrapper script that enables pausing, resuming and closer tracking of the batch processes.

- lsf (boolean) [FALSE]

- Set parallel data, such as np and hosts, from LSF variable LSB_HOSTS. Used when running AcuSolve through a LSF queue.

- sge (boolean) [FALSE]

- Set parallel data, such as np and hosts, from the $TMPDIR/machines SGE file. Used when running AcuSolve through a Sun Grid Engine queuing system.

- nbs (boolean) [FALSE]

- Set parallel data, such as np and hosts, from NBS var NBS_MACHINE_FILE.

- ccs (boolean) [FALSE]

- Set parallel data, such as np and hosts, from the CCP_NODES environment variable set by the CCS scheduler. Used when running AcuSolve through a Microsoft Compute Cluster Server job scheduler.

- slurm (boolean) [FALSE]

- Set parallel data, such as np and hosts, from output of the "scontrol show hostnames" command. Slurm Workload Manager, formerly known as Simple Linux Utility for Resource Management or SLURM, is an open source cluster management and job scheduling system for large and small Linux clusters.

- numactl (boolean) [FALSE]

- Use the Linux operating system command numactl to bind processes to cores. This option is only valid when running on Linux platforms.

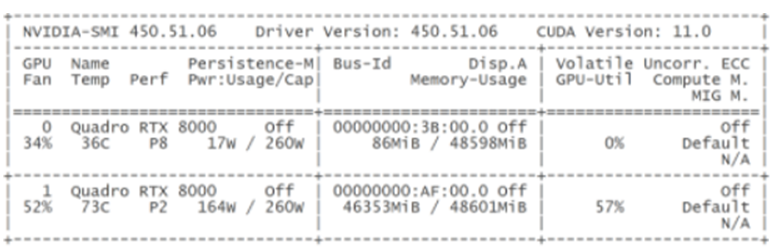

- run_with_gpu or gpu (boolean) [FALSE]

- If set, GPU acceleration of the solver is enabled. The GPU can speed up the linear solvers, which are the most time-consuming part of steady-state simulations. Currently, only a single GPU device is supported.

- gpuid (integer) [0]

- Choose specific GPU device when multiple GPUs are available. For a system with multiple Nvidia or AMD GPUs installed, use nvidia-smi or rocm-smi to list all of the available devices. gpuid specifies which device to use.

- gputype (enumerated) [cudasp]

- Specify GPU type Nvidia (cudasp) or AMD Radeon (rocmsp): cudasp, rocmsp.

- run_with_gpu_mesh or meshgpu (boolean) [TRUE]

- Solve mesh deformation equation using GPU. Used with the -gpu option.

- run_with_gpu_temp or tempgpu (boolean) [TRUE]

- Solve thermal equation using GPU. Used with the -gpu option.

- run_with_gpu_flow or flowgpu (boolean) [TRUE]

- Solve flow equation using GPU. Used with the -gpu option.

- run_with_gpu_sa or sagpu (boolean) [TRUE]

- Solve Spalart-Allmaras turbulence model using GPU. Used with the -gpu option.

- acuprep_executable or acuprep (string)

- Full path of the AcuPrep executable.

- acuview_executable or acuview (string)

- Full path of the AcuView executable.

- acusolve_executable or acusolve (string)

- Full path of the AcuSolve executable.

- acutrans_executable or acutrans (string)

- Full path of the AcuTrans executable, used with the -translate_output_to option.

- acuoptistruct_executable or acuoptistruct (string)

- Full path of the acuOptiStruct executable.

- acutail_executable or acutail (string)

- Full path of the AcuTail executable, used with the -tail_log option.

- acuprobe_executable or acuprobe (string)

- Full path of the AcuProbe executable, used with the -launch_probe option.

- acupmb_executable or acupmb (string)

- Full path of the AcuPmb executable.

- mpirun_executable or mpirun (string)

- Full path to mpirun, mpimon, dmpirun or prun executable used to launch an MPI job. If _auto, executable is determined internally. When using mp=pmpi, you can also set this option to _system, which will use the system level installation of Platform MPI.

- mpirun_options or mpiopt (string)

- Arguments to append to the mpirun command that is used to launch the parallel processes. When this option is set to _auto, AcuRun internally determines the options to append to the mpirun command.

- remote_shell or rsh (string)

- Remote shell executable for MPI launchers. Usually this is rsh (or remsh on HP-UX) but can be set to ssh if needed. When this option is set to _auto, the executable is determined internally.

- automount_path_remove or arm (string) [_none]

- Automount path remove. If automount_path_remove is set to _none, this option is ignored. See below for details.

- automount_path_replace or arep (string) [_none]

- Automount path replacement. If automount_path_replace is set to _none, this option is ignored. See below for details.

- automount_path_name or apath (string) [_none]

- Automount path name. If automount_path_name is set to _none, this option is ignored. See below for details.

- lm_daemon or lmd (string)

- Full address of the network license manager daemon, acuLmd, that runs on the server host. When this option is set to _auto, the value is internally changed to $ACUSIM_HOME/$ACUSIM_MACHINE/bin/acuLmd. This option is only used when lm_service_type = classical.

- lm_service_type or lmtype (enumerated) [hwu]

- Type of the license manager service:

- hwu

- Altair Units licensing

- token

- Token based licensing

- classical

- Classical AcuSim style licensing

- lm_server_host or lmhost (string)

- Name of the server machine on which the network license manager runs. When this option is set to _auto, the local host name is used. This option is only used when lm_service_type = classical.

- lm_port or lmport (integer) [41994]

- TCP port number that the network license manager uses for communication. This option is only used when lm_service_type = classical.

- lm_license_file or lmfile (string) [_auto]

- Full address of the license file. This file is read frequently by the network license manager. When this option is set to _auto, the value is internally changed to $ACUSIM_HOME/$ACUSIM_MACHINE/license.dat. This option is only used when lm_service_type = classical.

- lm_checkout or lmco (string) [_auto]

- Full address of the license checkout co-processor, AcuLmco. This process is spawned by the solver on the local machine. When this option is set to _auto, the value is internally changed to $ACUSIM_HOME/$ACUSIM_MACHINE/bin/acuLmco. This option is only used when lm_service_type = classical.

- lm_queue_time or lmqt (integer) [3600]

- The time, in seconds, to camp on the license queue before abandoning the wait. This option is only used when lm_service_type = classical.

- lm_type or lmt (enumerated) [classical]

- Type of license checkout, used with lm_service_type=classical:

- classical

- Classical

- light

- Light

- external_code_host or echost (string) [_auto]

- External code host name for establishing socket connection.

- external_code_port or ecport (integer) [0]

- External code port number for establishing socket connection.

- external_code_wait_time or ecwait (real) [3600]

- How long to wait for the external code to establish socket connection.

- external_code_echo or ececho (boolean) [FALSE]

- Echo messages received from external code for stand-alone debugging.

- line_buff or lbuff (boolean) [FALSE]

- Flush standard output after each line of output.

- print_environment_variables or printenv (boolean) [FALSE]

- Prints all the environment variables in AcuRun and those read by AcuSolve.

- mpitune or tune (boolean) [FALSE]

- When toggled on, this parameter will run Intel's mpitune application to optimize message passing speed. Only available when using mp=impi.

- verbose or v (integer) [1]

- Set the verbose level for printing information to the screen. Each higher verbose level prints more information. If verbose is set to 0, or less, only warning and error messages are printed. If verbose is set to 1, basic processing information is printed in addition to warning and error messages. This level is recommended. verbose levels greater than 1 provide additional information useful only for debugging.

Examples

Given the input file channel.inp of problem channel, the simplest way to solve this problem in scalar mode is:

acuRun -pb channel -np 1

or alternatively, place the options in the configuration file Acusim.cnf as

problem= channel

num_processors= 1

and invoke AcuRun as:

acuRun

In the above example, AcuRun executes AcuPrep to read and process the user input file, bypasses AcuView since there is no radiation data, and runs AcuSolve in scalar mode.

To execute AcuPrep, AcuView, and AcuSolve separately and log the output messages, issue the following commands:

acuRun -do prep -log

acuRun -do view -log -append

acuRun -do solve -log -append

where the last two invocations append to the .log file created by the first invocation. For problems with a large number of radiation surfaces, AcuView can be very expensive to run. This step can be skipped on the second and successive runs, if the geometry has not changed, by executing:

acuRun -do prep-solve -log

To run the channel problem in parallel on 10 processors using MPI, issue the following command:

acuRun -pb channel -mp mpi -np 10

Here AcuRun runs AcuPrep to prepare the data, sets up all MPI related data and runs acuSolve-mpi via mpirun. Note that the message_passing_type (mp) option is typically set by the system administrator in the system Acusim.cnf file.

To run the channel problem in parallel on four processors with six threads per processor using a hybrid MPI/OpenMP strategy, issue the following command:

acuRun -pb channel -mp impi -np 24 -nt 6

Here AcuRun runs AcuPrep to prepare the data, sets the MPI related data and the OpenMP parallel environment, and runs AcuSolve.
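The relationship between num_processors, num_threads and num_subdomains given in the Parameters section can be checked with a short Python sketch. The function name resolve_parallel_layout is hypothetical, and the requirement that num_processors be an exact multiple of num_threads is an assumption made here for illustration; it is not stated in this reference.

```python
def resolve_parallel_layout(num_processors: int,
                            num_threads: int,
                            num_subdomains: int = 1) -> dict:
    """Derive the process/thread layout from np, nt and nsd.

    Illustrative sketch only; resolve_parallel_layout is hypothetical.
    """
    if num_processors < 1 or num_threads < 1:
        raise ValueError("np and nt must be positive")
    # Assumption: np must divide evenly by nt.
    if num_processors % num_threads != 0:
        raise ValueError("np is assumed to be a multiple of nt")
    return {
        # np is the total thread count; physical processors = np / nt.
        "mpi_processes": num_processors // num_threads,
        "threads_per_process": num_threads,
        # nsd is reset to np when it is smaller than np.
        "num_subdomains": max(num_subdomains, num_processors),
    }


print(resolve_parallel_layout(24, 6))
# {'mpi_processes': 4, 'threads_per_process': 6, 'num_subdomains': 24}
```

Under these definitions, four processors with six threads each correspond to a total of 24 threads, that is, np=24 with nt=6.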

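On Windows, mp=impi requires a message passing daemon when running across multiple hosts. To install the daemon and then run the channel problem on two hosts, issue the following commands:

acuRun -pb channel -ismpd -mp impi

acuRun -pb channel -mp impi -np 2 -nt 1 -hosts winhost1,winhost2

When AcuRun launches the parallel job, it first checks for the presence of the appropriate message passing daemon and issues an error if the service is not found.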

To list the GPU devices on your system, issue the command nvidia-smi.

Figure 1.

You can choose any of the GPU cards by typing

acuRun -gpu -gpuid 0 (when you want to use the GPU card ID 0).

acuRun -gpu -gpuid 1 (for GPU card ID 1)

By default, every equation that is supported by GPU will be solved on GPU if GPU acceleration is enabled by specifying "-gpu". Alternatively, you can disable GPU acceleration of individual equations. For example, in an ALE simulation with mesh movement, specifying "-no_meshgpu" instructs the solver to solve the mesh movement equation on CPU. These options are useful if the mesh size of the simulation reaches the limit of what the GPU RAM size can allow.
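When AcuRun is driven from a script, the GPU options above can be assembled programmatically. The following Python sketch only builds the argument list and does not launch anything. The helper name acurun_args is hypothetical, and it assumes that any boolean option can be switched off with a "-no_" prefix, generalizing from the "-no_meshgpu" form shown above.

```python
def acurun_args(**options) -> list[str]:
    """Build an acuRun argument list from keyword options.

    Illustrative sketch only; acurun_args is a hypothetical helper.
    """
    args = ["acuRun"]
    for name, value in options.items():
        if value is True:
            args.append(f"-{name}")            # boolean switched on
        elif value is False:
            # Assumption: "-no_<name>" switches a boolean option off.
            args.append(f"-no_{name}")
        else:
            args.extend([f"-{name}", str(value)])
    return args


# Use GPU card 1, but keep the mesh movement equation on the CPU.
print(" ".join(acurun_args(pb="channel", gpu=True, gpuid=1, meshgpu=False)))
# acuRun -pb channel -gpu -gpuid 1 -no_meshgpu
```

The resulting list could be passed to a process launcher such as subprocess.run on a machine where acuRun is installed.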

The run_as_tets parameter may be used to split the mesh into an all tetrahedron mesh. For example,

acuRun -pb channel -tet

This will typically reduce the memory and CPU usage of AcuSolve, without reducing the accuracy. For fluid and solid media, brick elements are split into five or six tets, wedges are split into three tets, pyramids are split into two tets, and tets are kept as is. For thermal shells, brick elements are split into two wedges and wedge elements are kept as is. All surface facets are split into corresponding triangles. The split is consistent and conformal, in the sense that a quad face common to two elements is split in the same direction, and two quad faces with pair-wise periodic nodes have the same diagonal split. Moreover, the algorithm attempts to reduce large angles. The user element and surface numbers are modified by multiplying the user-given numbers by 10 and adding the local tet number. This only affects user numbers passed to user-defined functions.
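The renumbering rule can be written out as a small Python sketch, which may help when a user-defined function needs to map a split number back to the original element. The function names are hypothetical, and whether local tet numbers start at 0 or 1 is not stated here; the sketch works for either as long as the local number is a single digit.

```python
def split_number(user_number: int, local_tet: int) -> int:
    """Number given to a split element: user number * 10 + local tet number."""
    return user_number * 10 + local_tet


def original_number(number: int) -> tuple[int, int]:
    """Recover (user_number, local_tet) from a split element number."""
    return divmod(number, 10)


print(split_number(123, 4))   # 1234
print(original_number(1234))  # (123, 4)
```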

When running a problem with enclosure radiation, AcuRun detects this fact and runs AcuView by default. Also by default, the view factors are stored in the working directory under an internal name. The name and location of this file may be changed using the viewfactor_file_name parameter. This is useful when multiple problems are run with the same geometry, radiation surfaces and agglomeration. For example, assume input files test1.inp and test2.inp have the same geometry, radiation surfaces, and agglomeration parameters. In this case, to save CPU time they may be executed as:

acuRun -inp test1.inp -vf test.vf -do all

acuRun -inp test2.inp -vf test.vf -do prep-solve

The restart parameter creates and runs the simplest restart input file:

RESTART{}

RUN{}

The fast_restart parameter is a special form of restart that bypasses AcuPrep entirely. When specifying this type of restart, the solver simply resumes from the run and time step specified by fast_restart_run_id and fast_restart_time_step_id, with no modifications to the solver settings. The source run for the fast_restart must utilize the same number of processors as the current run. A limited number of modifications can be made when using the fast_restart parameter by using the AcuModSlv script to modify the solver directives file that is written into the working directory. This is an advanced option and is rarely used.

The parameters acuprep_executable, acuview_executable and acusolve_executable are advanced parameters and should rarely be changed. They are used to access executables other than the current one. If used, the version number of all modules must be the same.

Running AcuSolve and/or AcuView in distributed memory parallel requires that the problem and working directories be accessible by all involved machines. Moreover, these directories must be accessible by the same name. In general, working_directory does not pose any difficulty, since it is typically specified relative to the problem_directory. However, problem_directory requires special attention.

By default, problem_directory is set to the current Unix directory ".". AcuRun translates this address to the full Unix address of the current directory. It then performs three operations on this path name in order to overcome some potential automount difficulties. First, if the path name starts with the value of automount_path_remove, this starting value is removed. Second, if the path name starts with from_path, it is replaced by to_path, where the string "from_path,to_path" is the value of the automount_path_replace parameter. Third, if the path name does not start with the value of automount_path_name, it is added to the start of the path name. These operations are performed only if problem_directory is not explicitly given as a command line option to AcuRun, and also if the option associated with each operation is not set to _none. If problem_directory is explicitly given as a command line option, you are responsible for taking care of all potential automount problems.
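The three automount operations can be sketched in Python as follows. This is an illustration of the documented sequence, not AcuRun's own code; the function name resolve_problem_directory and all of the paths in the usage lines are hypothetical.

```python
def resolve_problem_directory(path: str,
                              remove: str = "_none",
                              replace: str = "_none",
                              name: str = "_none") -> str:
    """Apply the three automount operations, in order, to a full path.

    Illustrative sketch only; each operation is skipped when its option
    is "_none".
    """
    # 1. automount_path_remove: strip a leading prefix.
    if remove != "_none" and path.startswith(remove):
        path = path[len(remove):]
    # 2. automount_path_replace: "from_path,to_path" prefix substitution.
    if replace != "_none":
        from_path, to_path = replace.split(",", 1)
        if path.startswith(from_path):
            path = to_path + path[len(from_path):]
    # 3. automount_path_name: prepend the prefix unless already present.
    if name != "_none" and not path.startswith(name):
        path = name + path
    return path


# Hypothetical paths, for illustration only.
print(resolve_problem_directory("/tmp_mnt/home/user/channel", remove="/tmp_mnt"))
print(resolve_problem_directory("/export/home/user/channel", replace="/export/home,/home"))
print(resolve_problem_directory("/home/user/channel", name="/net/server1"))
```

With the hypothetical values shown, the first two calls both yield /home/user/channel, and the third yields /net/server1/home/user/channel.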

The parameters lm_daemon, lm_server_host, lm_port, lm_license_file, lm_checkout, and lm_queue_time are used for checking out a license from a network license manager when using classical Acusim licensing (lm_service_type = classical). These options are typically set once by the system administrator in the installed system configuration file, Acusim.cnf. Given the proper values, AcuSolve automatically starts the license manager if it is not already running. For a more detailed description of these parameters consult acuLmg. This executable is used to start, stop, and query the license manager. These options are ignored when lm_service_type = hwu.

There are four options that control how AcuSolve interacts with an external code for Direct Coupling Fluid Structural Interaction problems. The parameter external_code_host specifies a host name for establishing the socket connection and overwrites the socket_host parameter in the EXTERNAL_CODE command. Similarly, external_code_port specifies the port for the socket connection and overwrites socket_port in EXTERNAL_CODE. The maximum amount of time to wait for the external code connection is given by external_code_wait_time. The parameter external_code_echo echoes the messages received from the external code into a .ecd file. This file may subsequently be given as the value of fifo_receive_file of the EXTERNAL_CODE command with fifo type to perform a stand-alone run of AcuSolve.

AcuRun duplicates many of the AcuPrep, AcuView, and AcuSolve options. However, there are some advanced options in these modules that are not covered by AcuRun; see AcuPrep, AcuView, and AcuSolve for complete lists of their options. These options may be specified in the configuration files before running AcuRun.